19/10/22’ Lateworks

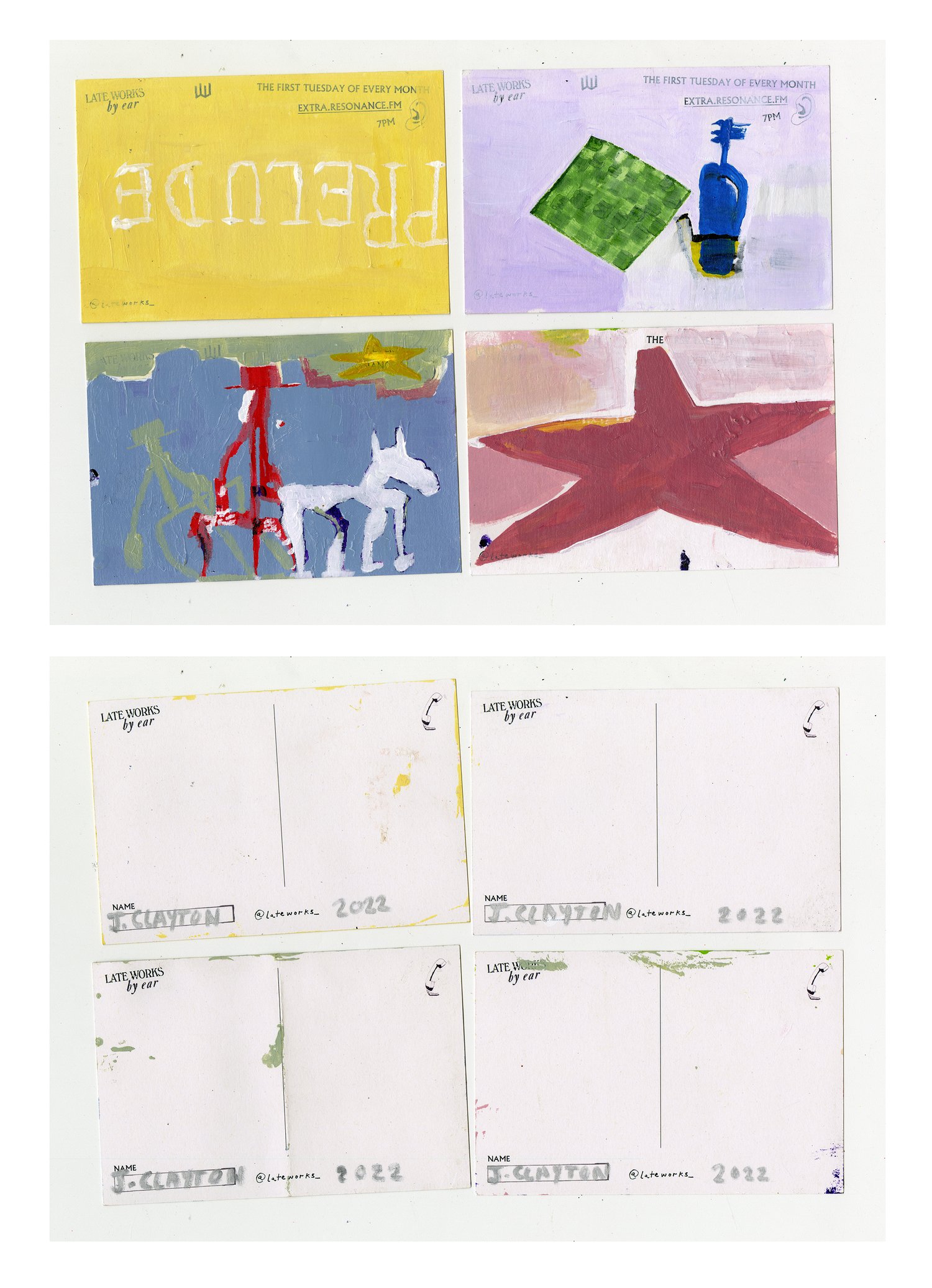

x4 (A6) Acrylic on card. Postcards made as a contribution to the November edition of Joe Bradley-Hill’s monthly ‘Lateworks’ radio show (resonance.fm)

This is a rolling project in which visual artists are invited to visually respond to the current month’s broadcast, which itself is a curation of musical interpretations of the previous artist’s postcards. Works are mailed to the artist and then mailed back to Lateworks in a process of the audible and the visual influencing each other in an indefinite chain.

There is something in this process of translation between two modes of communication that perpetuates a constant rediscovery. The chain of musical and visual responses means that, although I did not listen to many of the broadcasts myself, the work I make in the present can be logically traced back to decisions made and works produced at the beginning of the series (2018).

The Lateworks November radio show aired on the 1st. The track list made in response to the works is as follows:

Cornelius Cardew - Red Flag Prelude

Erik Satie - Prelude du Rideau Rouge

Late Works, Fen Trio, Gal Go - loamier and loamier (at first sight performance 3)

Laurie Anderson - For Electronic Dogs / Structuralist Filmmaking / Drums

Lou Harrison - Simfony No.13

Late Works, Fen Trio, Gal Go - gooseprints (at first sight performance 2)

Laurie Anderson - Walk the Dog

Bryan Jacobs - Nonaah with Balloon and Air Compressor

Elvis - Flaming Star

Enzo Minarelli - Neotonemes for Bells and Rustles (Audio Arts: Volume 6 No 4, Side B)

Blues for Jake Leigh Hobba (Audio Arts: Volume 6 No 4, Side B)

Neil Pedder (Audio Arts: Volume 6 No 4, Side B)

23/10/22’ (Communication with Tom Baldwin / AKA Prod Sandman about possible Audio/ Visual collaborative work)

“Tom

Following our conversation earlier, I am getting down some of the general ideas for our collaboration. This is to be an audio/visual work specifically designed for a live event, for which I will produce an interactive sculpture designed to trigger audible distortions and progressive shifts in a long-form soundscape, which you will produce specifically for this purpose of interaction.

By incorporating distortion pedals, sampling machines, and interactive lighting, we aim to design a system of limited controls which invites an audience to make spontaneous interactions with the sculpture and translates these to shape a live audio output. This output will be considered as the collaborative fruit of the project, and we intend to record this as a final composition.

We have agreed that certain limitations will be necessary in order to cap the capacity for distortion and direct a certain output without infringing on creative potential. The strategies we have discussed are in the realm of volume caps, and delayed action on the controllers. We have agreed that we want to prevent any changes made by participants from being immediate. We want to maintain a certain distance between the actions and reactions of the system, and to try to maintain harmony in the musical progression of the piece. Programmed triggers will be designed to put the system between the participants and the output.

Expanding on the musician/instrument relationship, sculptural works will perform the role of mediator between multiple untrained participants and a spontaneous audio output. My practice meditates on entropy through acts of translation and recording, so I am interested in producing a work that is perhaps inseminated with certain shortfalls or failures – this could be in the form of hidden or disguised actions. Materials and processes I am interested in working with are foams, plastics, construction materials, and laser cutting.

We have briefly discussed general themes and aesthetic directions which borrow from Sci-fi soundtracking, such as Vangelis’ Blade Runner soundtrack (1982) as well as visual references from the Warhammer universe. There is a certain melodic, dystopian influence that we envision in the experience of this work which serves as a starting point for experimentation.

Going forward I suggest that we define the specific audio technologies that we would like to use so that we can start making tests around how the soundscape can be interacted with as well as possible forms and requirements of the sculptural work(s). Once we have defined our parameters, we will have a space to play.

Let's try to maintain a back and forth in this form of letter writing, like you suggested, so we can track the progression of the project! Maybe you could respond with some thoughts on the starting composition and some possible directions for distortion and how keyboards/ samplers/ pedals could be used to trigger changes in the soundscape?”
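The volume cap and delayed controller action described in this letter could be sketched roughly as below. This is only an illustration under assumptions: the class name `LaggedControl`, the cap of 0.8 and the lag of 0.05 per tick are all hypothetical, and nothing here is tied to the hardware or software we eventually used. The idea is that a participant's gesture only sets a target, and the system drifts towards it gradually and never beyond the cap.

```python
from dataclasses import dataclass


@dataclass
class LaggedControl:
    """One participant-facing control whose effect is capped and delayed."""
    ceiling: float = 0.8   # hypothetical cap on the output level (0.0 to 1.0)
    lag: float = 0.05      # hypothetical fraction of the remaining gap closed per tick
    value: float = 0.0     # level currently sent to the audio output
    target: float = 0.0    # level the participant has asked for

    def touch(self, requested: float) -> None:
        # Clamp the request so no participant can push past the cap.
        self.target = max(0.0, min(requested, self.ceiling))

    def tick(self) -> float:
        # Move a small step towards the target instead of jumping to it,
        # so the change is heard gradually rather than immediately.
        self.value += (self.target - self.value) * self.lag
        return self.value


if __name__ == "__main__":
    control = LaggedControl()
    control.touch(1.0)  # participant turns the control fully up
    for step in range(1, 61):
        level = control.tick()
        if step % 20 == 0:
            print(f"tick {step}: level {level:.3f}")
```

A larger `lag` makes the system respond faster, a smaller one puts more distance between action and reaction.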

09/11/22’ (Communication with Tom Baldwin / AKA Prod Sandman about possible Audio/ Visual collaborative work) 2

Hey Jacob,

Just been catching up with whoever I can over the last few weeks around ideas for how we would pull this off! As previously mentioned, I'm super comfortable with the sonic side of things. Where things get tricky is the technical side, so please see below for what I've come up with so far!

Sampling machine (launching various sonic elements) - to pull this off we'd basically need a launchpad. In the interest of it not getting too expensive, I'd recommend something like this. Oddly enough I think it really fits the aesthetic and I am happy to invest in one. You could then possibly create an enclosure to house it and make it fit the aesthetic mentioned in your previous email! So that changes aren't sudden, I am going to drench the channel in reverb and assign slightly different patterns to each button, if that makes sense. I've included a mini Excel depiction of what this could look like, but when we get round to the project I'm thinking give or take 25 patterns in total (however, with my suggestion below we could have up to 64), with each button launching a different sonic element.

Let me know what you think of this and I can find out the measurements etc. for you so you can brainstorm ideas on the visual front.

Distortion and general sonic change - so as discussed at work the other day, the knobs on a keyboard work perfectly for this. We would essentially only be using the knobs on the right of the keyboard pictured below and assigning them to various FX. With 8 different controllable parts we can ensure that we have plenty of change and movement throughout, and we can even assign them to the different elements discussed above (melodies, chords etc.). I've also included an example below of the kind of thing we could manipulate, but based on the brief I think we should stick to psychedelic FX such as reverb, delay, overdrive, phaser, flanger and chorus, or even work in entire guitar FX racks, which I can run through my laptop in the interest of keeping things cheaper. As you've mentioned before, all of this can be limited so no one messes it up to the point where it goes outside the original brief.

(This will take up a lot of CPU, which is fine, but it does mean that we will have to house my laptop in something where it can remain on charge to avoid any issues.)

Lighting etc.

So this is where things get really difficult, and I've come to the conclusion that we would basically need some outside help to pull it off. We would need to build something in Max for Live to react to something outside of the digital audio workstation, so how do we do this? I've been given some contact details to reach out to, as we would basically need to design this ourselves. I'll keep on asking around uni to see if anyone is a wizard with it, but there might be a chance someone on your course would be able to help if there's an AV element to your masters.

Would you be able to ask around if anyone is comfortable programming Max for Live with lights? Maybe other students or even lecturers? I am also happy to reach out on a forum, but this part is basically really complex and takes some serious expertise. I'm not saying we can't pull it off, I'm saying we will need someone else's help!

10/12/22’ Soundscape produced by Tom Baldwin for WIP exhibition.

This is a recording of a live Ableton performance with interchangeable elements. After a lot of different ideas and figuring out what’s possible, Tom and I worked on some ideas together and settled on a very sparse soundscape, free of rhythm or drum elements. The idea was to have a floating space in which soundbites / samples could be triggered in a live setting. The base layer was built up of recordings from an airport lounge.

The poem that I wrote, which also served as a statement for the installation, was recorded using an AI voice generator as well as in Tom’s voice, and was then distorted live through several changeable filters altering pitch. These samples, as well as samples from a collection of videogames (including Half-Life 1 & 2) and other samples which inspired the work (Blade Runner, and recordings of Shakespeare's Hamlet), were triggered by Tom during the show and bounced around the room with multidirectional speakers so that different elements could be heard from different positions.

I asked Tom to play the poem sample every 10 minutes like a mantra, and if a new individual or group entered the show, he would target samples at them as they walked around the installation.

Having immersive sound as part of the installation really helped create a world. As you stepped through the door you were instantly aware of being in a new space, and it provided an extra catalyst which brought the works and the written materials together. Going forward, I have spoken to friends who know coders and do projection mapping, which is apparently easier than it seems. There is a possibility in the future of revisiting the idea of movements and lights triggering musical shifts themselves, removing the need for a computer operator, which could be fun!